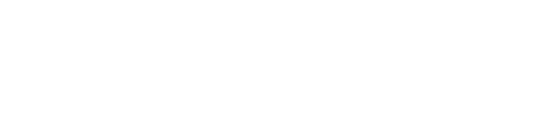

Data annotation, the meticulous process of labeling data for AI training, is often seen as a tedious back-end task. However, the quality of annotations directly impacts the performance and effectiveness of AI models.

Human-Centric Design (HCD) can help close the gap between how annotation is perceived and how much it matters, offering a practical approach to building data annotation tools that empower AI developers.

Why Is It Important to Use HCD for Data Annotation Tools?

Traditional data annotation tools often prioritize functionality over user experience. The result is unintuitive interfaces that hinder both developer productivity and data quality. HCD reverses this priority by focusing on the human in the loop: the AI developer.

By understanding the developer’s needs, workflows, and pain points, HCD principles can be applied to create data annotation tools that are:

- Intuitive and User-Friendly: A clear interface with a minimal learning curve lets developers quickly grasp the annotation process and focus on labeling data.

- Streamlined Workflows: Tools should anticipate the developer’s next steps and automate repetitive tasks, minimizing manual effort and maximizing efficiency.

- Error Prevention and Quality Control: Features like built-in validation checks and annotation history can help catch errors early and maintain data consistency.

- Collaboration and Feedback: Enabling communication between developers and annotators (when a separate annotation team is involved) fosters collaboration and speeds up the resolution of ambiguities in the data.

- Cognitive Support: Tools that use active learning or pre-populate suggested labels can reduce cognitive load and accelerate the annotation process.
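To make "built-in validation checks" concrete, here is a minimal sketch of what such a check might look like for bounding-box annotations. It assumes a box format of `(x, y, width, height)` in pixels and a fixed label schema; the names `ALLOWED_LABELS`, `Annotation`, and `validate_annotation` are hypothetical and not taken from any particular tool.

```python
from dataclasses import dataclass

# Hypothetical label schema for an image-tagging project (an assumption for illustration).
ALLOWED_LABELS = {"cat", "dog", "bird"}


@dataclass
class Annotation:
    """A single bounding-box annotation; the box is (x, y, width, height) in pixels."""
    label: str
    x: float
    y: float
    width: float
    height: float


def validate_annotation(ann: Annotation, image_width: int, image_height: int) -> list[str]:
    """Return a list of human-readable problems; an empty list means the annotation passed."""
    errors = []
    # Catch typos and labels that are not part of the agreed schema.
    if ann.label not in ALLOWED_LABELS:
        errors.append(f"unknown label {ann.label!r}")
    # Reject degenerate boxes, e.g. from an accidental click instead of a drag.
    if ann.width <= 0 or ann.height <= 0:
        errors.append("box must have positive width and height")
    # The box must lie entirely inside the image.
    if (ann.x < 0 or ann.y < 0
            or ann.x + ann.width > image_width
            or ann.y + ann.height > image_height):
        errors.append("box extends outside the image")
    return errors


if __name__ == "__main__":
    # A valid annotation produces no errors.
    print(validate_annotation(Annotation("dog", 10, 10, 50, 40), 640, 480))
    # A mistyped label on a box that runs past the right edge reports both problems.
    print(validate_annotation(Annotation("dgo", 600, 10, 80, 40), 640, 480))
```

Returning a list of messages rather than raising on the first failure lets an interface show every problem at once, right next to the annotation, which fits the goal of catching errors early without interrupting the annotator's flow.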

Benefits of HCD for Developers

HCD-driven data annotation tools empower AI developers in multiple ways:

- Increased Productivity: Streamlined workflows and intuitive interfaces save developers valuable time and effort.

- Improved Data Quality: Error prevention features and quality control mechanisms help ensure high-quality training data for AI models.

- Reduced Frustration: A user-friendly experience reduces friction during long annotation sessions and fosters a more positive development environment.

- Enhanced Collaboration: Features that facilitate communication with annotators or other developers streamline the data pipeline.

- Faster Model Development: More efficient annotation shortens the time between collecting data and training on it, accelerating iteration and deployment cycles.

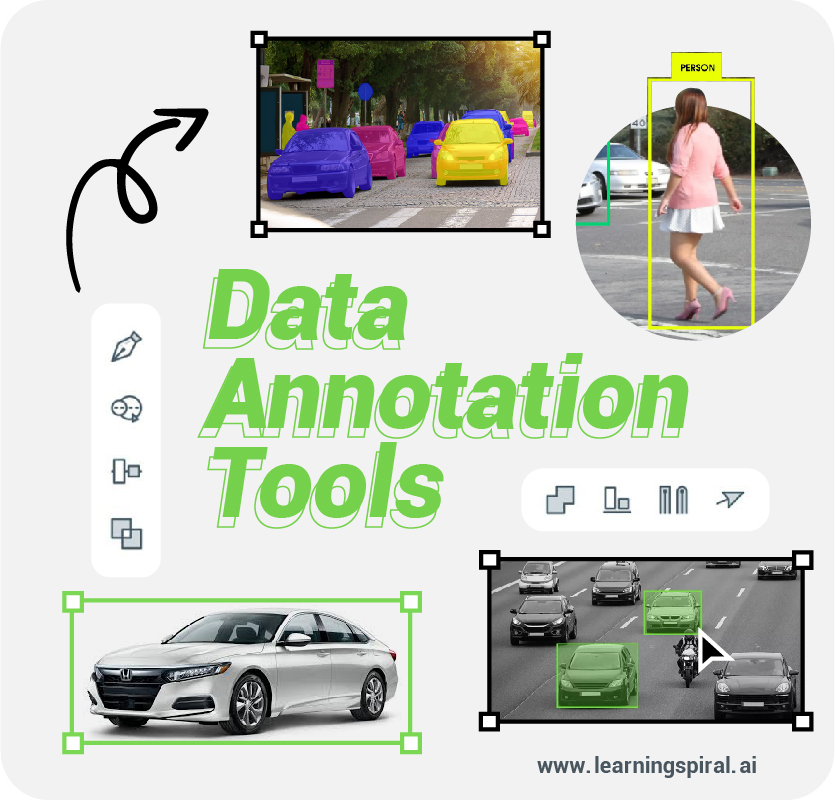

Examples of HCD in Action

Here are some practical examples of how HCD principles can be applied to data annotation tools:

- Keyboard Shortcuts: Providing keyboard shortcuts for frequently used actions allows for faster navigation and labeling.

- Batch Annotation: Applying one label to many selected items at once, for repetitive tasks like image tagging, saves time and reduces the chance of inconsistent labels.

- Visual Cues and Overlays: Highlighting specific areas of interest in data points through overlays or visual cues guides developers and improves labeling accuracy.

- Multiple Annotation Views: Offering views suited to different data types (e.g., text, image, video) caters to different developer preferences and helps visualize complex data interactions.

- Active Learning: Active learning algorithms can prioritize the most ambiguous or informative data points for annotation, so fewer labels are needed overall and the labeling workload for developers shrinks.
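One common active-learning strategy is uncertainty sampling: ask for labels on the items the current model is least sure about. The sketch below ranks unlabeled items by the entropy of their predicted class probabilities. It assumes a model has already produced those probabilities; the item ids, probability values, and the function names `entropy` and `select_for_annotation` are hypothetical, chosen only for illustration.

```python
import math


def entropy(probs):
    """Shannon entropy (in nats) of a probability distribution; higher means more uncertain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_annotation(predictions, k):
    """Return the ids of the k items whose predicted class distribution has the highest entropy.

    `predictions` maps an item id to the model's class probabilities for that item.
    """
    ranked = sorted(predictions, key=lambda item_id: entropy(predictions[item_id]), reverse=True)
    return ranked[:k]


# Hypothetical model outputs for four unlabeled images over three classes.
predictions = {
    "img_001": [0.98, 0.01, 0.01],  # model is confident
    "img_002": [0.34, 0.33, 0.33],  # model is nearly guessing
    "img_003": [0.60, 0.30, 0.10],
    "img_004": [0.50, 0.50, 0.00],
}

if __name__ == "__main__":
    # The two most uncertain images are queued for human labeling first.
    print(select_for_annotation(predictions, 2))
```

Entropy is only one possible uncertainty measure; least-confidence (one minus the top probability) or the margin between the top two classes are common alternatives, and a real tool would typically retrain the model and re-rank after each labeled batch.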

Conclusion

By adopting a human-centric approach, data annotation tools can be transformed from a bottleneck into a powerful asset in AI development. HCD lets developers focus on the core tasks of model creation while helping ensure high-quality training data. As AI development continues to evolve, HCD is likely to be instrumental in building annotation tools that empower developers and accelerate the creation of robust, reliable, and ultimately beneficial AI models.