Have you ever smiled at your phone and seen it respond with a cheerful emoji or suggest a related sticker? That’s not a coincidence — it’s AI at work, recognizing your facial expression and responding accordingly. This is made possible through facial expression annotation, a key process in teaching...

In the age of artificial intelligence (AI), data is the key to unlocking the full potential of machine learning models. However, raw data on its own is insufficient; it needs to be processed, labeled, and annotated to ensure that AI systems can understand and act upon it. This is...

The agriculture sector is undergoing a transformation with the integration of artificial intelligence (AI) and machine learning, driving efficiency and sustainability. One of the key technologies enabling this revolution is data annotation. At Learning Spiral AI, we are at the forefront of providing high-quality data annotation services,...

In the rapidly evolving retail landscape, artificial intelligence (AI) is playing an increasingly pivotal role in transforming the shopping experience. One of the most impactful areas of AI integration is image annotation, which is helping retailers enhance both the customer experience and operational efficiency. As AI-driven technologies advance, data...

In the age of artificial intelligence (AI), the ability to understand and process human language has become a crucial aspect of technological advancement. Text data annotation plays a vital role in enhancing AI communication and understanding, enabling machines to interpret, respond, and engage with human language in ways that...

In the rapidly evolving world of artificial intelligence (AI), the accuracy and effectiveness of machine learning models rely heavily on the quality of the data used to train them. While algorithms and automation play a significant role, one crucial factor that cannot be overlooked is the human element in...

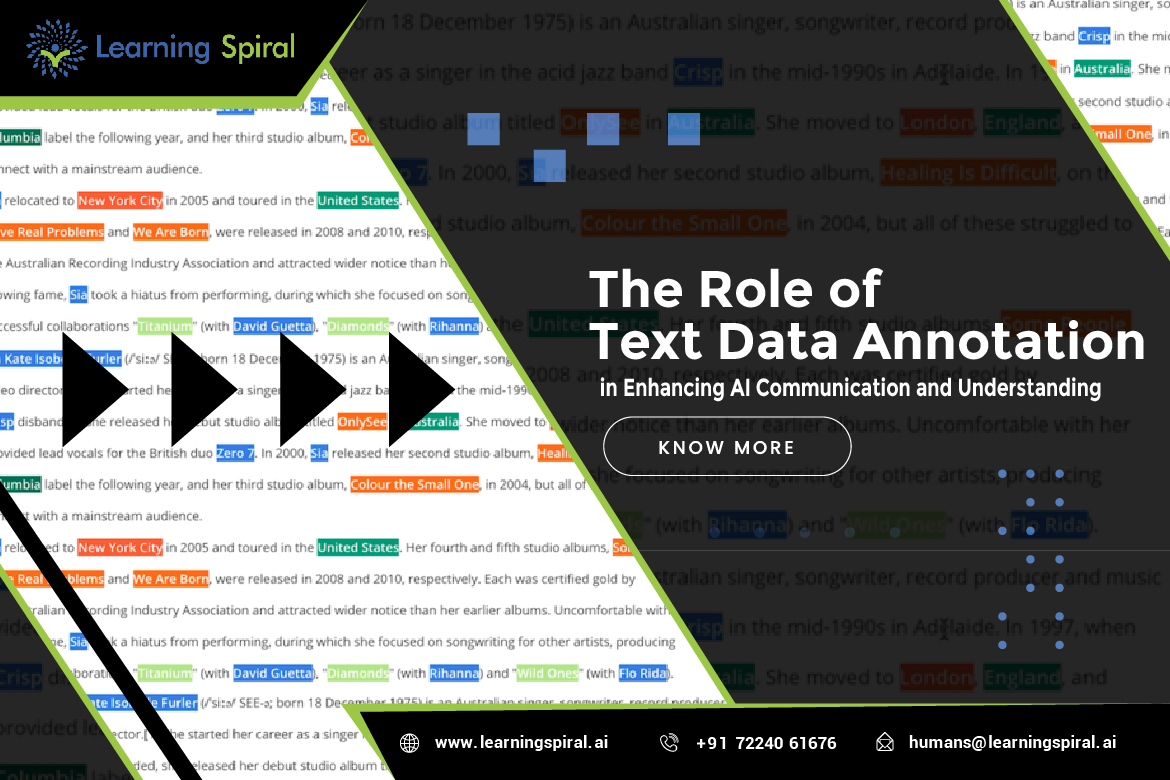

Lidar (Light Detection and Ranging) technology plays a pivotal role in the development of autonomous vehicles, enabling them to navigate their environment with high precision. For autonomous vehicles, the ability to detect and classify objects such as pedestrians, other vehicles, and obstacles is crucial to ensuring safety and reliability....

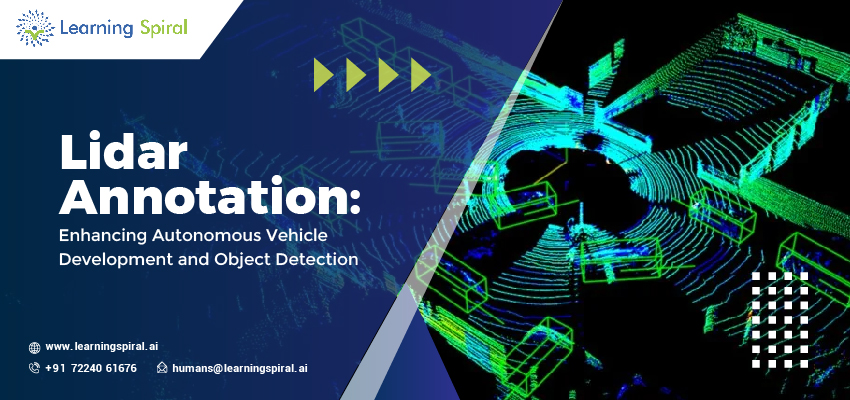

Aerial surveillance has revolutionized the way industries like agriculture, defense, urban planning, and environmental monitoring collect data. The use of drones and satellites to capture high-resolution images from the sky offers unparalleled insight, but these images need to be accurately annotated for effective analysis. Image annotation for aerial imagery...

Reinforcement Learning from Human Feedback (RLHF) is revolutionizing AI by aligning models with human intent, improving safety, accuracy, and ethical decision-making. This technique plays a pivotal role in fine-tuning AI models, enabling them to adapt to complex real-world scenarios while minimizing biases. However, the quality of labeled data used...